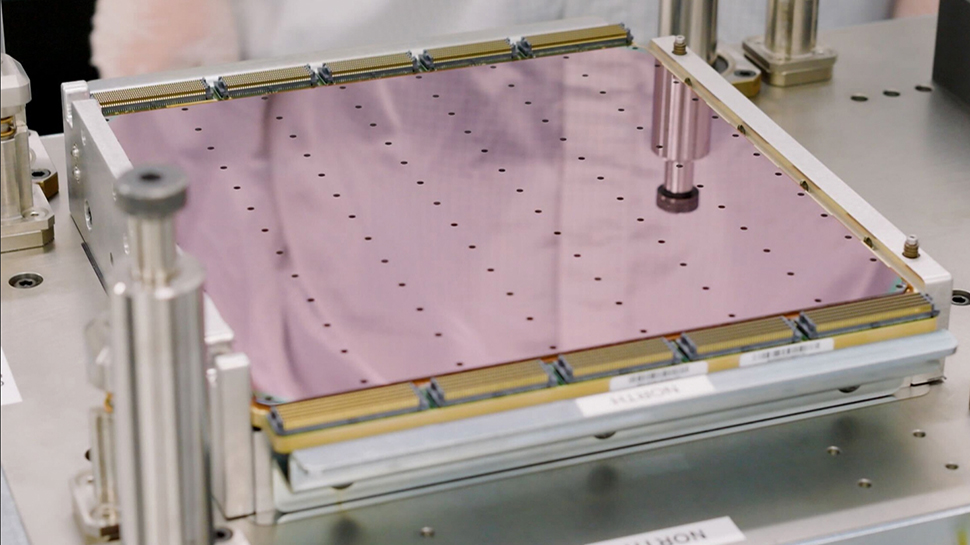

Cerebras Systems has unveiled its Wafer Scale Engine 3 (WSE-3), dubbed the “fastest AI chip in the world.”

The WSE-3, which powers the Cerebras CS-3 AI supercomputer, reportedly offers twice the performance of its predecessor, the WSE-2, at the same power consumption and price.

The chip is capable of training AI models with up to 24 trillion parameters, a significant leap from previous models.

CS-3 supercomputer

The WSE-3 is built on a 5nm TSMC process and features 44GB on-chip SRAM. It boasts four trillion transistors and 900,000 AI-optimized compute cores, delivering a peak AI performance of 125 petaflops – that’s the theoretical equivalent to about 62 Nvidia H100 GPUs.

The CS-3 supercomputer, powered by the WSE-3, is designed to train next-generation AI models that are 10 times larger than GPT-4 and Gemini. With a memory system of up to 1.2 petabytes, it can reportedly store 24 trillion parameter models in a single logical memory space, simplifying training workflow and boosting developer productivity.

Cerebras says its CS-3 supercomputer is optimized for both enterprise and hyperscale needs, and it offers superior power efficiency and software simplicity, requiring 97% less code than GPUs for large language models (LLMs).

Cerebras CEO and co-founder, Andrew Feldman, said, “WSE-3 is the fastest AI chip in the world, purpose-built for the latest cutting-edge AI work, from mixture of experts to 24 trillion parameter models. We are thrilled for bring WSE-3 and CS-3 to market to help solve today’s biggest AI challenges.”

The company says it already has a backlog of orders for the CS-3 across enterprise, government, and international clouds. The CS-3 will also play a significant role in the strategic partnership between Cerebras and G42, which has already delivered 8 exaFLOPs of AI supercomputer performance via Condor Galaxy 1 and 2. A third installation, Condor Galaxy 3, is currently under construction and will be built with 64 CS-3 systems, producing 8 exaFLOPs of AI compute.

+ There are no comments

Add yours