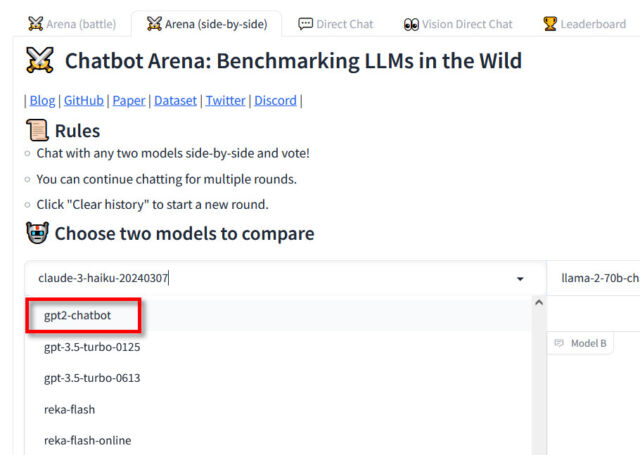

On Sunday, word began to spread on social media about a new mystery chatbot named “gpt2-chatbot” that appeared in the LMSYS Chatbot Arena. Some people speculate that it may be a secret test version of OpenAI’s upcoming GPT-4.5 or GPT-5 large language model (LLM). The paid version of ChatGPT is currently powered by GPT-4 Turbo.

Currently, the new model is available only through the Chatbot Arena website, and only in a limited way. In the site’s “side-by-side” arena mode, where users can deliberately select the model, gpt2-chatbot has a rate limit of eight queries per day, dramatically limiting people’s ability to test it in detail.

So far, gpt2-chatbot has inspired plenty of rumors online, including that it could be the stealth launch of a test version of GPT-4.5 or even GPT-5—or perhaps a new version of 2019’s GPT-2 that has been trained using new techniques. We reached out to OpenAI for comment but did not receive a response by press time. On Monday evening, OpenAI CEO Sam Altman seemingly dropped a hint by tweeting, “i do have a soft spot for gpt2.”

Benj Edwards

Early reports of the model first appeared on 4chan, then spread to social media platforms like X, with hype following not far behind. “Not only does it seem to show incredible reasoning, but it also gets notoriously challenging AI questions right with a much more impressive tone,” wrote AI developer Pietro Schirano on X. Soon, threads on Reddit popped up claiming that the new model had amazing abilities that beat every other LLM on the Arena.

Intrigued by the rumors, we decided to try out the new model for ourselves but did not come away impressed. When asked about “Benj Edwards,” the model made a few mistakes and used some awkward language compared to GPT-4 Turbo’s output. A request for five original dad jokes fell short. And gpt2-chatbot did not decisively pass our “magenta” test. (“Would the color be called ‘magenta’ if the town of Magenta didn’t exist?”)

A gpt2-chatbot result for “Who is Benj Edwards?” on LMSYS Chatbot Arena. Mistakes and oddities highlighted in red.

A gpt2-chatbot result for “Write 5 original dad jokes” on LMSYS Chatbot Arena.

A gpt2-chatbot result for “Would the color be called ‘magenta’ if the town of Magenta didn’t exist?” on LMSYS Chatbot Arena.

So, whatever it is, it’s probably not GPT-5. We’ve seen other people reach the same conclusion after further testing, saying that the new mystery chatbot doesn’t seem to represent a large capability leap beyond GPT-4. “Gpt2-chatbot is good. really good,” wrote HyperWrite CEO Matt Shumer on X. “But if this is gpt-4.5, I’m disappointed.”

Still, OpenAI’s fingerprints seem to be all over the new bot. “I think it may well be an OpenAI stealth preview of something,” AI researcher Simon Willison told Ars Technica. But what “gpt2” is exactly, he doesn’t know. Judging from the online speculation, no one apart from its creator seems to know precisely what the model is, either.

Willison has uncovered the system prompt for the AI model, which claims it is based on GPT-4 and made by OpenAI. But as Willison himself noted in a tweet, that’s no guarantee of provenance because “the goal of a system prompt is to influence the model to behave in certain ways, not to give it truthful information about itself.”

Frustration over lack of transparency

Even though Willison is generally impressed with gpt2-chatbot’s output, watching people in the AI field so desperate for scraps of information has left him frustrated with how some LLMs are tested and released. “The whole situation is so infuriatingly representative of LLM research,” he told Ars Technica in an interview. “A completely unannounced, opaque release and now the entire Internet is running non-scientific ‘vibe checks’ in parallel.”

We’ve previously written about the frustratingly imprecise nature of LLM benchmarks and how that leads the industry to rely on “vibes,” as Willison calls them, through A/B usage testing on leaderboards like Chatbot Arena.

But with vibes, it’s hard to pin down what an LLM’s capabilities actually are. “It seems to ‘know more stuff’ than GPT-4, at least from my own initial impressions,” Willison said, basically thinking out loud. “But is that the model itself, or can it do RAG [retrieval augmented generation] and look stuff up elsewhere? I’m pretty sure it’s not doing RAG, but I’m not 100 percent certain because no one has told us anything about it.”

Willison also called into question the policy of LMSYS allowing anonymous AI language models on the site, wondering if the launch was a buzz-building marketing stunt. “They’re supposed to be a neutral benchmarking tool; it’s not a great look if they’re working behind-the-scenes with model vendors in an opaque manner like this,” said Willison in a tweet on X.

Shortly after, LMSYS replied to Willison’s concerns in a way that repeats the organization’s written policy: “We’ve partnered with several model developers to bring their new models to our platform for community preview testing. These models are strictly for testing and won’t be listed on the leaderboard until they go public.”

This is apparently not the first time an unreleased model has been in the LMSYS arena. LMSYS policy says, “We allow model providers to test their unreleased models anonymously (i.e., the model’s name will be anonymized). A model is unreleased if its weights are neither open nor available via a public API or service.”

We reached out to LMSYS for comment but did not receive a response by press time.

Still, Willison is frustrated about the lack of public information from whoever is actually responsible for gpt2-chatbot. “I get it, it’s a great way to get some buzz going and a fun mystery for people,” he told us. “But come on now, why does everything have to be a game? Tell us about your new model already!”

Willison isn’t alone in his frustration. On X, Wharton professor Ethan Mollick tweeted, “OpenAI may be one of the most important technology companies in the world today, but they really like to communicate through hints and oracular whispers. What is GPT2? At this rate we will only know that GPT-5 is being launched from an ‘I Love Bees’-esque Alternate Reality Game.”
