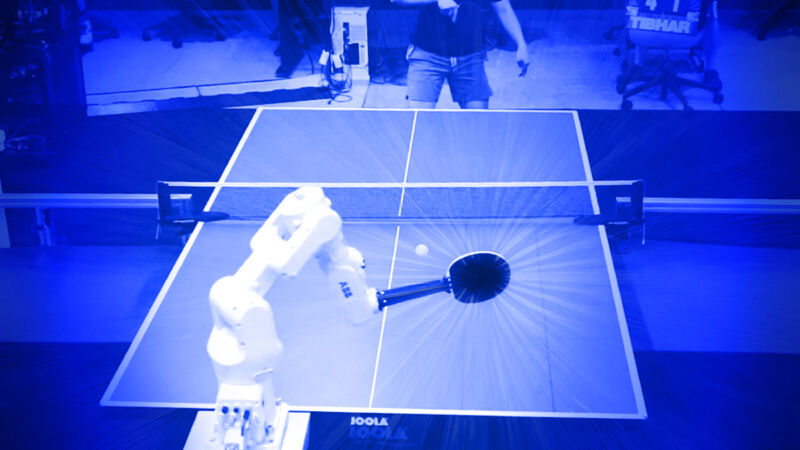

Benj Edwards / Google DeepMind

On Wednesday, researchers at Google DeepMind revealed the first AI-powered robotic table tennis player capable of competing at an amateur human level. The system combines an industrial robot arm called the ABB IRB 1100 and custom AI software from DeepMind. While an expert human player can still defeat the bot, the system demonstrates the potential for machines to master complex physical tasks that require split-second decision-making and adaptability.

“This is the first robot agent capable of playing a sport with humans at human level,” the researchers wrote in a preprint paper listed on arXiv. “It represents a milestone in robot learning and control.”

The unnamed robot agent (we suggest “AlphaPong”), developed by a team that includes David B. D’Ambrosio, Saminda Abeyruwan, and Laura Graesser, played a series of matches against human players of varying skill levels. In a study involving 29 participants, the AI-powered robot won 45 percent of its matches, demonstrating solid amateur-level play. It won every match against beginners and 55 percent of its matches against intermediate players, though it struggled against advanced opponents.

A Google DeepMind video of the AI agent rallying with a human table tennis player.

The physical setup consists of the aforementioned IRB 1100, a 6-degree-of-freedom robotic arm, mounted on two linear tracks, allowing it to move freely in a 2D plane. High-speed cameras track the ball’s position, while a motion-capture system monitors the human opponent’s paddle movements.

AI at the core

To create the brains that power the robotic arm, DeepMind researchers developed a two-level approach that allows the robot to execute specific table tennis techniques while adapting its strategy in real time to each opponent’s playing style. In other words, it’s adaptable enough to play any amateur human at table tennis without requiring specific per-player training.

The system’s architecture combines low-level skill controllers (neural network policies trained to execute specific table tennis techniques like forehand shots, backhand returns, or serve responses) with a high-level strategic decision-maker (a more complex AI system that analyzes the game state, adapts to the opponent’s style, and selects which low-level skill policy to activate for each incoming ball).
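As a rough illustration of that two-level design, the Python sketch below has a high-level controller pick one low-level skill for each incoming ball and adjust its preferences as points are won or lost. Everything in it is hypothetical: the `Ball` fields, the skill names, the side-matching bonus, and the moving-average update are assumptions made for illustration, not details from DeepMind's paper. The real low-level skills are neural network policies that drive the arm, not the stub functions used here.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Ball:
    """Hypothetical summary of an incoming ball; not the paper's state format."""
    side: str       # "forehand" or "backhand", relative to the robot
    is_serve: bool  # True if the opponent is serving


# Stand-ins for the low-level skills. In the real system these are neural
# network policies that output arm commands; here they only return a label.
def forehand_skill(ball: Ball) -> str:
    return "forehand shot"


def backhand_skill(ball: Ball) -> str:
    return "backhand return"


def serve_response_skill(ball: Ball) -> str:
    return "serve response"


@dataclass
class HighLevelController:
    """Chooses one low-level skill per incoming ball (illustrative heuristic)."""
    skills: Dict[str, Callable[[Ball], str]]
    # Running success estimate per skill against the current opponent.
    success: Dict[str, float] = field(default_factory=dict)

    def candidates(self, ball: Ball) -> List[str]:
        # Assumption: serves get the serve skill; rallies may use either stroke.
        if ball.is_serve:
            return ["serve_response"]
        return ["forehand", "backhand"]

    def select(self, ball: Ball) -> str:
        def score(name: str) -> float:
            # Small bonus for the stroke that matches the ball's side.
            bonus = 0.25 if name == ball.side else 0.0
            return self.success.get(name, 0.5) + bonus
        return max(self.candidates(ball), key=score)

    def act(self, ball: Ball) -> str:
        return self.skills[self.select(ball)](ball)

    def update(self, name: str, won_point: bool, rate: float = 0.2) -> None:
        # Exponential moving average of point outcomes for this skill.
        old = self.success.get(name, 0.5)
        self.success[name] = old + rate * ((1.0 if won_point else 0.0) - old)


controller = HighLevelController(
    skills={
        "forehand": forehand_skill,
        "backhand": backhand_skill,
        "serve_response": serve_response_skill,
    }
)
print(controller.act(Ball(side="backhand", is_serve=False)))
```

In this toy version, repeatedly losing points with one stroke lowers its score until the controller switches to the other, which is a loose stand-in for the per-opponent adaptation the researchers describe.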

According to the researchers, one of the project’s key innovations was the method used to train the AI models. They chose a hybrid approach that used reinforcement learning in a simulated physics environment while grounding the training data in real-world examples. This technique allowed the robot to learn from around 17,500 real-world ball trajectories, a fairly small dataset for such a complex task.

A Google DeepMind video showing an illustration of how the AI agent analyzes human players.

The researchers used an iterative process to refine the robot’s skills. They started with a small dataset of human-vs-human gameplay, then let the AI loose against real opponents. Each match generated new data on ball trajectories and human strategies, which the team fed back into the simulation for further training. This process, repeated over seven cycles, allowed the robot to continuously adapt to increasingly skilled opponents and diverse play styles. By the final round, the AI had learned from over 14,000 rally balls and 3,000 serves, creating a body of table tennis knowledge that helped it bridge the gap between simulation and reality.
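The shape of that train-play-collect loop can be sketched in a few lines of Python. This is only a schematic under stated assumptions: `iterative_training`, `train_in_sim`, and `play_real_matches` are hypothetical names, the `Trajectory` and `Policy` types are placeholders, and the stub functions at the bottom exist purely so the loop runs. Only the overall structure and the default of seven cycles come from the article.

```python
from typing import Callable, List, Optional, Tuple

Trajectory = dict  # placeholder for one recorded ball trajectory
Policy = dict      # placeholder for a trained policy


def iterative_training(
    seed_data: List[Trajectory],
    train_in_sim: Callable[[List[Trajectory], Optional[Policy]], Policy],
    play_real_matches: Callable[[Policy], List[Trajectory]],
    cycles: int = 7,
) -> Tuple[Policy, List[Trajectory]]:
    """Alternate simulated training with real-world data collection."""
    if cycles < 1:
        raise ValueError("cycles must be at least 1")
    dataset = list(seed_data)  # start from the small seed dataset
    policy: Optional[Policy] = None
    for _ in range(cycles):
        # Train in simulation on all real-world data gathered so far.
        policy = train_in_sim(dataset, policy)
        # Play real opponents and feed the new trajectories back in.
        dataset.extend(play_real_matches(policy))
    return policy, dataset


# Stub implementations (hypothetical) so the loop can be exercised.
def fake_train(dataset: List[Trajectory], previous: Optional[Policy]) -> Policy:
    version = 0 if previous is None else previous["version"]
    return {"version": version + 1, "trained_on": len(dataset)}


def fake_matches(policy: Policy) -> List[Trajectory]:
    return [{"source": "real", "cycle": policy["version"]} for _ in range(3)]


seed = [{"source": "human-vs-human"}, {"source": "human-vs-human"}]
final_policy, all_data = iterative_training(seed, fake_train, fake_matches)
print(final_policy["version"], len(all_data))
```

The point of the structure is that each cycle trains on every trajectory collected so far, so the simulated ball distribution drifts toward what real opponents actually produce.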

Interestingly, Nvidia has also been experimenting with similar simulated physics systems, such as Eureka, that allow an AI model to rapidly learn to control a robotic arm in simulated space instead of the real world (since the physics can be accelerated inside the simulation, and thousands of simultaneous trials can take place). This method is likely to dramatically reduce the time and resources needed to train robots for complex interactions in the future.

Humans enjoyed playing against it

Beyond its technical achievements, the study also explored the human experience of playing against an AI opponent. Surprisingly, even players who lost to the robot reported enjoying the experience. “Across all skill groups and win rates, players agreed that playing with the robot was ‘fun’ and ‘engaging,’” the researchers noted. This positive reception suggests potential applications for AI in sports training and entertainment.

However, the system is not without limitations. It struggles with extremely fast or high balls, has difficulty reading intense spin, and shows weaker performance in backhand plays. Google DeepMind shared an example video of the AI agent losing a point to an advanced player due to what appears to be difficulty reacting to a speedy hit, as you can see below.

A Google DeepMind video of the AI agent playing against an advanced human player.

The implications of this robotic ping-pong prodigy extend beyond the world of table tennis, according to the researchers. The techniques developed for this project could be applied to a wide range of robotic tasks that require quick reactions and adaptation to unpredictable human behavior. From manufacturing to health care (or just spanking someone with a paddle repeatedly), the potential applications seem large indeed.

The research team at Google DeepMind believes that, with further refinement, the system could eventually compete with advanced table tennis players. DeepMind is no stranger to creating AI models that can defeat human game players, including AlphaZero and AlphaGo. With this latest robot agent, it looks like the research company is moving beyond board games and into physical sports. Chess and Jeopardy have already fallen to AI-powered victors; perhaps table tennis is next.
