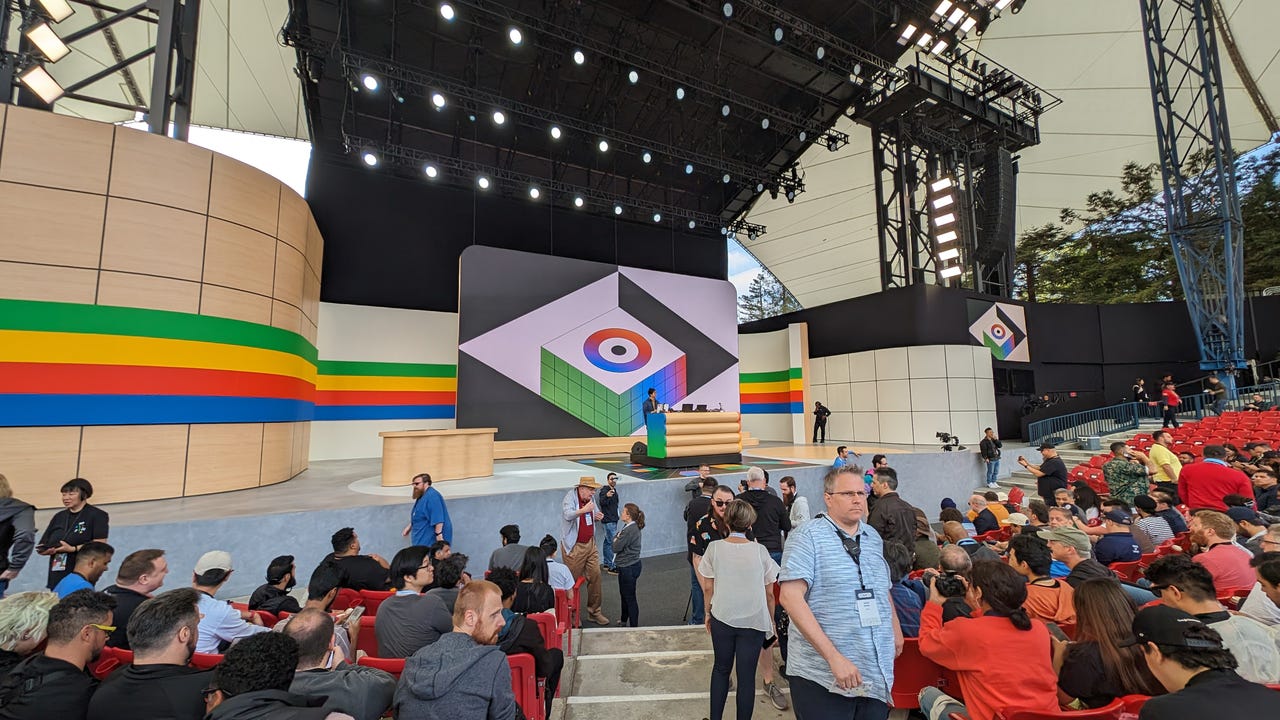

At its annual developer event, Google I/O, Google unveiled many new AI products, features, and upgrades. How many? AI was mentioned 120 times during the two-hour keynote, as CEO Sundar Pichai unabashedly admitted by the end of it. Some of these new offerings provide AI solutions to common problems, while others, although impressive, are unlikely to add much value to our everyday lives. Not mine, at least.

To help you sort through all of the announcements and identify which can positively impact your everyday life, I’ve rounded up what I found to be the most impressive AI features, ranked from most likely to optimize your everyday life to least likely.

1. Ask Photos

This feature was mentioned so briefly during the keynote that you could have easily missed it. However, Ask Photos has the potential to benefit most people by introducing a Gemini chatbot into Google Photos that can help users sort through their photos.

With the Ask Photos feature, users can describe what photos or content from their album they want to find. Google Photos will find it for them in their camera roll, even packaging together multiple photos when necessary, as seen in the demo below.

On the I/O stage, Google CEO Sundar Pichai gave two examples that showcased the feature’s usefulness. In the first example, a user asked, “What is my license plate number?” Then, Gemini pulled the number, using context to identify which car belonged to the user. In the second, a user who wanted to see photos of their daughter’s progress as a swimmer over time had Gemini automatically package the highlights for them by simply asking it to.

With the number of photos we take and save daily, this type of assistance in sorting, organizing, and packaging content is extremely helpful. Google shared that the feature will be coming to Google Photos later this summer and even teased that more capabilities are to come.

2. Gmail Q&A feature

This feature was also discussed only briefly near the keynote’s end, making it easy to miss; however, it solves a real-world problem. During the Google Workspace portion of the keynote, the company announced three new features coming to Gmail on mobile, including Gmail Q&A.

As the name implies, the Gmail Q&A feature enables users to chat with Gemini about the content of their emails within the Gmail mobile app, allowing them to ask specific questions about their inbox.

In the demo presented on the Google I/O stage, for example, the user asked Gemini to compare roofer repair bids by price and availability. Gemini was then able to pull the information from several inboxes and display it for the user, as seen in the image below.

Because of my line of work (and my shopping habits), my inbox is flooded with emails daily. Having a tool that can conversationally answer questions about my multiple inboxes on my mobile phone is a game-changer, taking the assistance provided by email AI summarizers to the next level. The feature will be released to Google Lab users in late July.

3. Project Astra/Gemini Live

One of the keynote’s most impressive moments was when Google DeepMind played a video of Project Astra, which showed an AI voice assistant that can help with visual prompts using the user’s camera, as seen in the video below.

We’re sharing Project Astra: our new project focused on building a future AI assistant that can be truly helpful in everyday life. 🤝

Watch it in action, with two parts – each was captured in a single take, in real time. ↓ #GoogleIO pic.twitter.com/x40OOVODdv

Google DeepMind (@GoogleDeepMind), May 14, 2024

Project Astra is a project from Google DeepMind meant to reshape the future of AI assistants by giving voice assistants awareness of a user’s environment. The project is being infused into Gemini Live, a mobile experience where users can have conversations with Gemini that include the context of their surroundings.

In the Gemini Live experience, users can also choose from various natural-sounding voices and interrupt Gemini mid-conversation, making these exchanges more natural and intuitive.

Users cannot yet take advantage of the entire multimodal experience of Gemini Live, which Google plans to add later this year, but the technology has the potential to transform the voice assistant experience. This leads to my next point.

4. Google Assistant: demoted, not dead

During the event, Google slyly slipped in that Gemini could soon replace Google Assistant as the default AI assistant across Android phones. Despite Google’s subtle mention, this is a huge deal because it will change how Android users, not just Pixel owners, interact with their voice assistants.

The change is also significant because it should improve the quality of assistance, as Gemini is capable of advanced language processing. The plans for Gemini look promising, with Google sharing that the AI will eventually be overlaid across various services and apps, providing multimodal and on-screen support when requested.

5. Gemini Advanced upgrade to Gemini 1.5 Pro

Google first launched Gemini’s premium subscription tier — Gemini Advanced — in February, providing users with access to Google’s latest AI models and longer conversations. At Google I/O, the company amplified the offerings even further, with one of the biggest upgrades being access to Gemini 1.5 Pro.

Gemini 1.5 Pro gives the public a context window of 1 million tokens. To put that number into perspective, users now can upload documents of up to 1,500 pages, 100 emails, or 96 Cheesecake Factory menus, as Pichai mentioned on stage. Google claims that it is the largest context window of any widely available consumer chatbot.
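To make those comparisons concrete, here is a rough back-of-the-envelope sketch in Python. It assumes each quoted figure (1,500 pages, 100 emails, 96 menus) is an alternative way of filling the full 1-million-token window on its own, which is my reading of the on-stage claim rather than anything Google has specified. The resulting per-item budgets are implied averages, not measured token counts, and the function name is purely illustrative.

```python
# Illustrative arithmetic only. The figures come from the keynote claims
# quoted above; the per-item budgets are implied averages under the
# assumption that each item type fills the window by itself.
CONTEXT_WINDOW_TOKENS = 1_000_000

# Item counts quoted on stage.
quoted_capacity = {
    "document pages": 1_500,
    "emails": 100,
    "Cheesecake Factory menus": 96,
}


def implied_tokens_per_item(window_tokens: int, item_count: int) -> int:
    """Average token budget per item if item_count items fill the window."""
    return window_tokens // item_count


budgets = {
    name: implied_tokens_per_item(CONTEXT_WINDOW_TOKENS, count)
    for name, count in quoted_capacity.items()
}

for name, budget in budgets.items():
    print(f"{name}: about {budget:,} tokens each")
```

Read this way, a page works out to a few hundred tokens, while each email or menu gets a budget of roughly ten thousand tokens, which suggests the email and menu figures describe long, attachment-heavy items rather than short messages.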

Although I don’t think an average user needs this type of window, if you happen to be a superuser who needs assistance with large amounts of data, this added context window is a game changer. Interested users can access Gemini Advanced through the Google One AI Premium plan, which costs $20 monthly once the trial expires.

6. Veo and Imagen 3

At Google I/O, Google launched its most advanced AI text-to-image generator, Imagen 3, and text-to-video generator, Veo. Both offer significant upgrades from their predecessors, with higher-quality outputs and higher fidelity to users’ prompts. The models are being previewed with select creators; to get access to either of these models, interested users have to sign up on a waitlist.

Introducing Veo: our most capable generative video model. 🎥

It can create high-quality, 1080p clips that can go beyond 60 seconds.

From photorealism to surrealism and animation, it can tackle a range of cinematic styles. 🧵 #GoogleIO pic.twitter.com/6zEuYRAHpH

Google DeepMind (@GoogleDeepMind), May 14, 2024

Even though both models look extremely promising and drive forward AI image and video generation, they are ranked toward the bottom of the list because they don’t seem to add much value to people’s everyday lives or workflows, unless you are a creative professional working with video and image generation every day. For non-creatives, they are cool tools to have in your back pocket when the opportunity arises.

7. AI Overviews in Google Search

Last up is the AI Overviews feature in Google Search. I placed AI Overviews at the bottom of the list because, even though some might find the AI-generated insights at the top of Search results helpful, there was no real need to push the feature onto everyone searching in English in the US. The broader rollout seems to be solving a problem that wasn’t there to begin with.

The previous system, in which users had to opt in to the Search Generative Experience (SGE) to access AI Overviews, seemed more useful: you could easily get the overviews if you wanted them, and your search experience remained unchanged if you didn’t.
